Reclaim your traffic by fixing hidden issues

Are you worried you’re wasting lots of your business’s digital marketing budget on Search Engine Optimisation without getting anything to show for it?

Need to find out if there are hidden flaws in your site that keep your web pages from ranking high in Google’s results?

What if I told you that SEO doesn’t need to be confusing or expensive?

It sounds highly unlikely, right?

But ask yourself this: how many times have you tweaked your site’s code or invested in publishing more content, but found your pages remain nowhere to be seen on the first page of Google?

Have you ever wished that you could uncover what the problem is without having to resort to paying for a full website SEO audit? Or worse, paying for your team to learn the finer details of search marketing?

If you answered yes to the above questions, then read on to discover the most common errors SEOs discover that stop your site ranking high in Google.

You’ll also find out why today really isn’t the day you should go out and buy a lottery ticket. Mark my words…

In this article, you will learn…

- The main ingredient Google needs to rank your site in its results pages

- The biggest problem Google has when efficiently indexing your website

- How you can tell Google which keyword phrases you want to rank for

- Why the mobile version of your site matters more than your desktop site

- How web designers accidentally trash site rankings and kill businesses

So Google, what exactly is your problem?

So your brand is ready to hit the big time – you have created your site, launched it, populated it with heaps of content, held your breath for a few months (or even years) and now you are just waiting for visitors to start flocking in.

Looking at your site on paper, you have everything you need to rank highly on Google’s search results – rich and engaging content, social media shares, incoming links from other sites, and so on. However, your site’s search engine rankings are still poor and as a result, you are getting far fewer customers than you had hoped. So, what is going wrong?

When it comes to search engine optimisation, the tactics seem pretty straightforward.

However, the process of optimising your site is far more complex, and it is very easy to miss some SEO errors that may not be immediately visible. These hidden SEO errors are most likely the main cause of your poor Google rankings.

Below is a look at 5 of the most common SEO errors web designers and content editors are often blissfully unaware of – errors you should avoid at all costs.

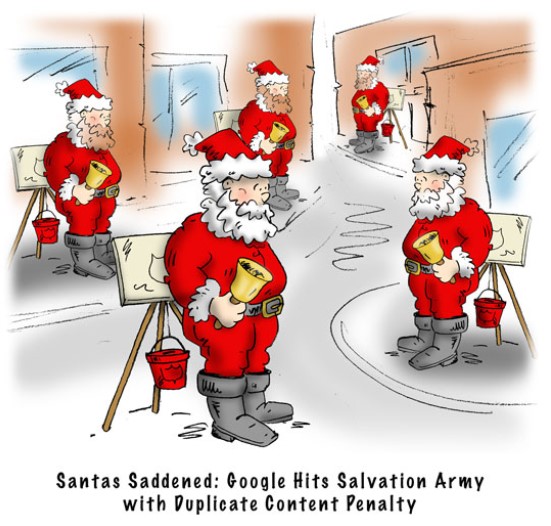

1 – Duplicate content URLs

Duplicate content is a big no-no when it comes to Google, and you are likely to receive harsh penalties that will affect your rankings if your website has duplicate content.

The fact is that Google is super efficient at making money. It is just as good at saving money, and because one of Google’s biggest expenses is crawling web pages, the search engine is totally opposed to wasting ‘crawl budget’ on content it has already seen elsewhere on the web, even if it saw that same content on your own site (at a different URL).

Unfortunately, duplicate content is usually unintentional and occurs because content editors are unaware they are creating heaps of weak content every time they create new blog tag pages. Web developers often create a duplicate version of every single page of the site when they incorrectly change a site’s URLs from HTTP to HTTPS or from www to non-www.

Other duplicate content issues are created by content management systems such as WordPress, which create a page for every image you upload, a page for every date in the blog archive, one for every author… and the list goes on.

All of these extra URLs with weak or duplicate content are created unintentionally and most web editors are totally unaware that they have effectively diluted Google’s index of your site and wasted precious crawl budget.

Nonetheless, Google doesn’t care much about intentions when it comes to SEO errors, and they will still penalise you. I mean, how is Google to know which URL is the correct one to rank in the search results? It doesn’t, and it can end up splitting your ranking power between multiple URLs, leaving none of them in the search results.

Therefore, after you have launched your website, you (or whoever is responsible for digital marketing) should monitor it regularly using tools such as Google Search Console or pro tools such as SEMRush or Ahrefs to identify duplicate content issues as soon as they occur.
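If you have a list of crawled URLs to hand (exported from one of those tools, say), a few lines of Python can flag the HTTP/HTTPS and www/non-www doubles mentioned above. This is only a rough sketch: the `variant_key` and `find_duplicate_variants` names and the example.com URLs are made up for illustration, and it only compares URL strings, not the content behind them.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def variant_key(url: str) -> str:
    """Reduce a URL to a key that ignores scheme, a leading 'www.' and a trailing slash."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    path = parts.path.rstrip("/") or "/"
    key = host + path
    if parts.query:
        key += "?" + parts.query
    return key


def find_duplicate_variants(urls):
    """Group URLs by key and return only the groups with more than one variant."""
    groups = defaultdict(list)
    for url in urls:
        groups[variant_key(url)].append(url)
    return {key: found for key, found in groups.items() if len(found) > 1}


# Hypothetical crawl export: three versions of the same page, plus one unique page.
crawled = [
    "http://example.com/about",
    "https://example.com/about/",
    "https://www.example.com/about",
    "https://www.example.com/contact",
]
duplicates = find_duplicate_variants(crawled)
```

Any group that comes back has more than one live address for what is probably the same page, which makes it a candidate for a redirect to a single preferred URL.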

The best way to eliminate duplicate content is to not create it in the first place. Stop over-tagging blog posts, and deal with the archive pages you don’t need: either block crawlers from them using disallow rules in your robots.txt file, or no-index them with a robots meta tag, which plugins such as Yoast can add for you if your site is built using the WordPress platform.
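Before you upload a robots.txt file, it is worth checking which URLs its disallow rules actually keep crawlers away from. Here is a minimal sketch using Python’s standard `urllib.robotparser` module. The rules and URLs are hypothetical examples, not recommendations for your site, and a disallow rule only stops crawling, so it is not a substitute for a noindex tag.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules that keep crawlers out of tag and date archive pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /tag/
Disallow: /2019/
"""


def blocked_urls(robots_txt: str, urls, user_agent: str = "*"):
    """Return the URLs that the given robots.txt rules stop a crawler from fetching."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


urls = [
    "https://example.com/tag/seo/",
    "https://example.com/2019/05/",
    "https://example.com/blog/hidden-seo-errors/",
]
blocked = blocked_urls(ROBOTS_TXT, urls)
```

If a page you want ranked turns up in `blocked`, the rules are too broad.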

2 – Poor and lean content

You know you are not allowed to just steal content from other sites, right?

I mean, it sounds obvious but many business owners would, and should, be horrified if they discovered that their content writers or web editors had simply pinched and reworded an existing page from elsewhere on the web.

Still, it happens.

Let me ask you a question…

When was the last time you ran your site pages through a tool such as Copyscape – which finds other content on the web that looks suspiciously similar to your content?

Google is in the business of organising the world’s information, and you can bet your last dollar that they know where they spotted a block of content first.

Just as bad for your search engine results page rankings is content that is weak or offers little value to the reader. Indeed, there is little point posting an article that has fewer than 200 words, as Google knows that doesn’t give your site visitors much value.

Also at risk from Google’s Panda algorithm are pages such as your contact pages, lookbooks, galleries and any product pages that have a low word count. You need to populate those pages with unique, informative content; ideally, thousands of words of it.

Can’t be bothered to give your web page visitors value? Then it is better to no-index those pages so Google knows not to allow them to dilute its index of your site’s pages.

Again, you can fix this by running the site through pro SEO tools and either improving those pages or keeping them out of Google’s index. You will be ensuring the search engine doesn’t split your ranking power between similar pages, and also helping your visitors enjoy a better user experience.
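If you don’t have a pro tool to hand, a rough word count is easy to script. The sketch below uses only Python’s standard library and the 200-word figure mentioned earlier. The `VisibleText`, `word_count` and `thin_pages` names and the sample pages are invented for illustration, and it counts every word outside script and style tags, so navigation and footer text will inflate the numbers on a real page.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collect the words found outside <script> and <style> elements."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.words = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.words.extend(data.split())


def word_count(html: str) -> int:
    """Count whitespace-separated words in the page text."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    return len(parser.words)


def thin_pages(pages: dict, minimum: int = 200) -> list:
    """Return the URLs whose word count falls below the minimum."""
    return [url for url, html in pages.items() if word_count(html) < minimum]


# Hypothetical pages: a bare contact page and a longer guide.
pages = {
    "/contact": "<html><body><p>Call us today.</p></body></html>",
    "/guide": "<html><body><p>" + "word " * 250 + "</p></body></html>",
}
thin = thin_pages(pages)
```

Each URL in `thin` is a page to either beef up or keep out of the index.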

3 – Non-optimised meta titles

Unknown to most web editors and content writers is the fact that you need to be super specific about the keyword phrases you are trying to rank your web page for.

That’s right, targeting a phrase such as “flats for sale” is mightily different to targeting “flats for sale in London” and “1-bed flats for sale in London” and of course, “1 Bed flats for sale in London SW1”. Not only are these phrases different to each other, but they are also related to search queries that differ greatly in the amount of search volume and competition.

With the above in mind, it’s always wise to use a pro SEO tool or just use Google’s suggested keywords (seen in the drop-down in the search box and at the bottom of the search results pages) to decide exactly which keyword phrase you want to optimise your page for.

Alternatively, you can use specialist tools for keyword research. One of my favourites is SEMScoop Keyword Tool, a smart, out-of-the-box keyword research and SERP analysis tool for digital marketers, bloggers and small businesses who are interested in improving their site’s overall ranking. It can help find true ranking opportunities and low SEO difficulty terms to create smart and competitive content.

If your web editor or digital marketing manager doesn’t use pro SEO tools and can’t tell you what phrase each page of your site is optimised for – you’ll need them to hire an SEO, because they are not up to the job.

Once you know which phrase you want your page to rank for, you need to make sure that phrase and related synonyms are included in the page’s meta title and content. That’s right, you can’t just rely on your e-commerce platform or content management system to create an automated meta title for each page. It needs to be written to include your target keyword phrase AND qualifier keywords (such as buy, online, shop, free, best, etc) in order to qualify your page to rank for other longer search terms your market is searching for.

Once you have listed the target keyword phrases for each page of your site in a spreadsheet you’ll want to make sure variations of that phrase are used in page headings, subheadings, paragraph content, image file names, image alt tags and importantly, as the clickable anchor text used in internal links around your site.

Try it now! Ask your web editor what search query (keyword phrase) a certain page is targeting and then check you see that phrase, and variations of it, in the metadata and throughout the content. If you had the content written to help increase visibility in Google and it isn’t optimised for a phrase you want to target, you’ll need to tell them they are not doing their job properly, or at all.
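You can run the same check yourself in a few lines of Python. This is just a sketch using the standard library: it pulls the title out of a page’s HTML and tests whether the target phrase appears in it. The `TitleParser`, `page_title` and `title_targets_phrase` names and the sample page are made up for the example, and a simple substring match will miss synonyms and reordered phrases.

```python
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Capture the text inside the page's <title> element."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def page_title(html: str) -> str:
    """Return the title text with runs of whitespace collapsed."""
    parser = TitleParser()
    parser.feed(html)
    parser.close()
    return " ".join(parser.title.split())


def title_targets_phrase(html: str, phrase: str) -> bool:
    """Case-insensitive check that the phrase appears in the title."""
    return phrase.lower() in page_title(html).lower()


# Hypothetical page targeting one of the phrases discussed above.
html = (
    "<html><head><title>1-Bed Flats for Sale in London SW1 | Buy Online"
    "</title></head><body></body></html>"
)
on_target = title_targets_phrase(html, "flats for sale in London")
off_target = title_targets_phrase(html, "houses for sale")
```

Loop that over the spreadsheet of pages and target phrases and you have a quick list of titles that need rewriting.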

4 – Slow page loading speeds

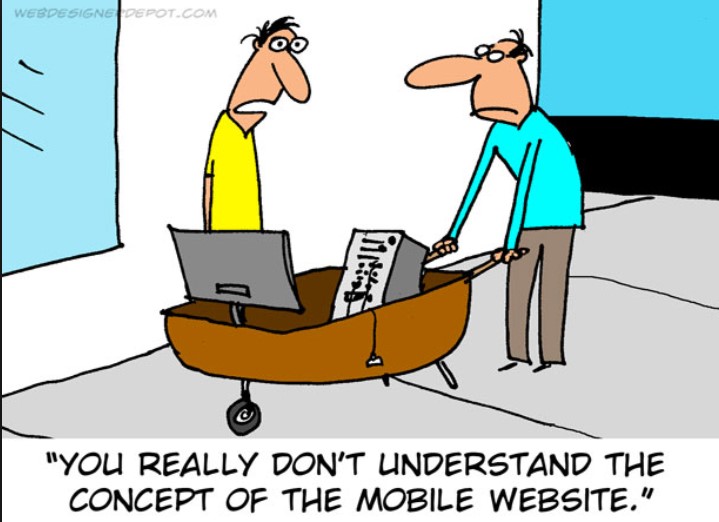

Slow-loading web pages are another common and hidden SEO error that might be causing your site to receive poor Google search rankings. Indeed, heavy web pages have been dropping out of the search results fast since Google started ranking pages using the mobile version of each page, following its move to mobile-first indexing.

Because many web designers and online writers are not up to date with Google’s repeated warnings and recommendations about the importance of keeping page load times low, many businesses are losing rankings, traffic and money every day.

Worse is that when marketing managers or business owners visit the web pages their team have published, they see the quick-loading cached version of the page – rather than the slow loading page that new visitors view.

Add to that the fact that your staff likely view your site on a laptop or desktop computer rather than a mobile device (which is the device type most of the web’s traffic is viewed on) and you’ll be blissfully unaware of the bad experience your pages cause your visitors to suffer.

Get this… Even if you don’t care about your site visitors’ user experience – Google does, and has likely already penalised your slow loading pages out of the top results to ensure THEIR users don’t have to suffer these flaws.

Slow page load speeds are usually caused by huge images and graphics that your content editor or blogger added to the page without resizing them first. Photos taken directly from cameras are notorious offenders because their pixel dimensions are much larger than the space they fill on the web page, and they are often saved at a print resolution of 300 pixels per inch rather than the 72 traditionally used for screens.

So the file size of each image may be… 10 or more times larger than it needs to be. Try waiting for that to load up on a mobile device without bailing out and going to a competitor site instead.
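To put a rough number on that, compare how many pixels the photo contains with how many the page actually displays. The sketch below is simple arithmetic, with made-up function names and example dimensions. Pixel count is only a proxy for file size, since compression varies from image to image.

```python
def oversize_factor(image_px, display_px):
    """How many times more pixels the image holds than the page displays."""
    image_w, image_h = image_px
    display_w, display_h = display_px
    return (image_w * image_h) / (display_w * display_h)


def fit_within(image_px, max_width):
    """Scale dimensions down to max_width, keeping the aspect ratio."""
    width, height = image_px
    if width <= max_width:
        return (width, height)
    return (max_width, round(height * max_width / width))


# Hypothetical example: a 6000 x 4000 camera photo shown in a 1200 x 800 slot.
factor = oversize_factor((6000, 4000), (1200, 800))
resized = fit_within((6000, 4000), 1200)
```

In this example the photo carries 25 times more pixels than the page can show, so resizing it to 1200 x 800 before upload removes most of the dead weight.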

Other culprits include host servers with poor performance, too many plugins on your site, and badly coded web pages (just right click on your page and hit ‘view source’ to see all that extra silly code that rubbish ‘website builder’ toys like Wix and Squarespace create). Bless them.

Ok, so it may be a while before you move onto a proper content management system if somebody cursed you with a site created with one of the website builder tools mentioned above, but if you want to be sure you are not missing out on all that traffic and revenue you had your heart set on, monitor your website regularly with tools such as WebPageTest or even the page speed report in Google Analytics. And if you’re unsure whether your site has hidden technical issues, be sure to run through a comprehensive SEO audit checklist or hire a professional SEO auditor.

5 – Broken incoming links

Links, just like content, are another of the most important factors used by Google’s search algorithm. Ok, that is an understatement – incoming links are still the most important ranking factor your web pages need in order to outrank competitors.

Sure, if there is low competition to rank in the top slot for a keyword phrase, you can achieve this without any incoming links from other sites. For more competitive search terms (you know, the ones that make money for businesses via sales) you will need to power your pages up with links.

A whole lot has been said about the different ways of acquiring links from other sites, and that subject is beyond the scope of this article.

There are lots of different types of links you can get, each of varying value to your SEO campaign. These include easy-to-acquire links such as those from your social media profiles and directory links, guest posts and links from partner and client sites. Hard-to-acquire incoming links are earned from other sites that genuinely want to tell their readership about your content.

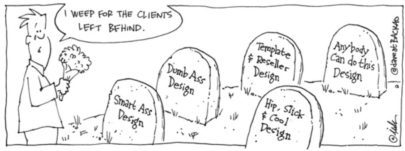

However, the most common mistake that trashes a site’s Google rankings is totally avoidable – have you made it? Wait for it… the usual suspects: well-meaning web designers and developers who replaced your old site with a new site – and changed all the URLs!!!

Don’t laugh, it seems so obvious to them as soon as you tell them that the old site had built up heaps of ranking power from incoming links to your old page URLs. And they usually recoil in shame when they realise they are the reason you have lost thousands of pounds’ worth of sales since they launched your new site – then, of course, the well-meaning chaps jump into defensive mode by claiming that it was not part of the spec list.

So, whenever you put the fate of your business’s income and profits in the hands of a “designer” rather than someone who is concerned with your sales and marketing, be sure they crawl your old site before it is replaced, and redirect all those old URLs to the new URLs the minute the new site is launched.
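Checking that nothing was missed is easy to automate. Here is a minimal sketch, assuming you have the crawled list of old paths and a mapping of old paths to new ones. The function names and the paths are hypothetical, and the actual redirects still need to be set up on your server or CMS.

```python
def missing_redirects(old_urls, redirect_map):
    """Return the old URLs that have no redirect target at all."""
    return sorted(url for url in old_urls if url not in redirect_map)


def redirect_chains(redirect_map):
    """Return redirects whose target is itself redirected (a chain to flatten)."""
    return {old: new for old, new in redirect_map.items() if new in redirect_map}


# Hypothetical crawl of the old site and a partly finished redirect map.
old_urls = ["/about.html", "/services.html", "/contact.html", "/shop.html"]
redirect_map = {
    "/about.html": "/about/",
    "/services.html": "/what-we-do/",
    "/shop.html": "/services.html",
}
missing = missing_redirects(old_urls, redirect_map)
chains = redirect_chains(redirect_map)
```

Anything in `missing` will hit a dead end on launch day, and anything in `chains` should point straight at its final URL.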

To be fair, the number of businesses that died due to trusting inexperienced web designers shouldn’t really be attributed to the designers. After all, you hired them because they like pictures and images.

And that’s good, let them draw nice pictures and leave the digital marketing to those who know how to create and monetise online markets.

Oh, and speaking of killing businesses…

I mentioned above that you probably shouldn’t take the risk of venturing out into town to buy a lottery ticket today. Why?

Because you have more chance of dropping dead on the way to the store than of actually winning anything.

Hey, don’t shoot the messenger!

Summing it up

Each of the above hidden SEO errors can result in lower search engine rankings for your website, not to mention a poor user experience that can drive away any traffic arriving on your site – which generates higher bounce rates that cause rankings to tank even further.

By ensuring Google can efficiently crawl your quick-loading pages, find plenty of keyword-rich content on them and assign the ranking power each page deserves, you can be sure most of the search engine’s problems have been resolved.

Fortunately, even though the above issues are usually created by (but hidden from) web designers and content editors who think they are helping your project, it is very easy for SEO specialists to spot them.

Therefore you, or whoever you have put in charge of your site, should regularly monitor your website, either manually using Google Search Console or via an expert who uses professional SEO tools.

Remember, all that time you put into creating a site and populating it with content could be wasted because you trusted a designer to optimise your pages for search engines. Or because you let an inexperienced copywriter come up with your business’s search marketing. The resultant loss of revenue isn’t really their fault – it’s yours.